GPU No Longer Working in RStudio Server with Tensorflow-GPU for AWS - Machine Learning and Modeling - Posit Community

Best Deep Learning NVIDIA GPU Server in 2022 2023 – 8x water-cooled NVIDIA H100, A100, A6000, 6000 Ada, RTX 4090, Quadro RTX 8000 GPUs and dual AMD Epyc processors. In Stock. Customize and buy now

Use an AMD GPU for your Mac to accelerate Deeplearning in Keras | by Daniel Deutsch | Towards Data Science

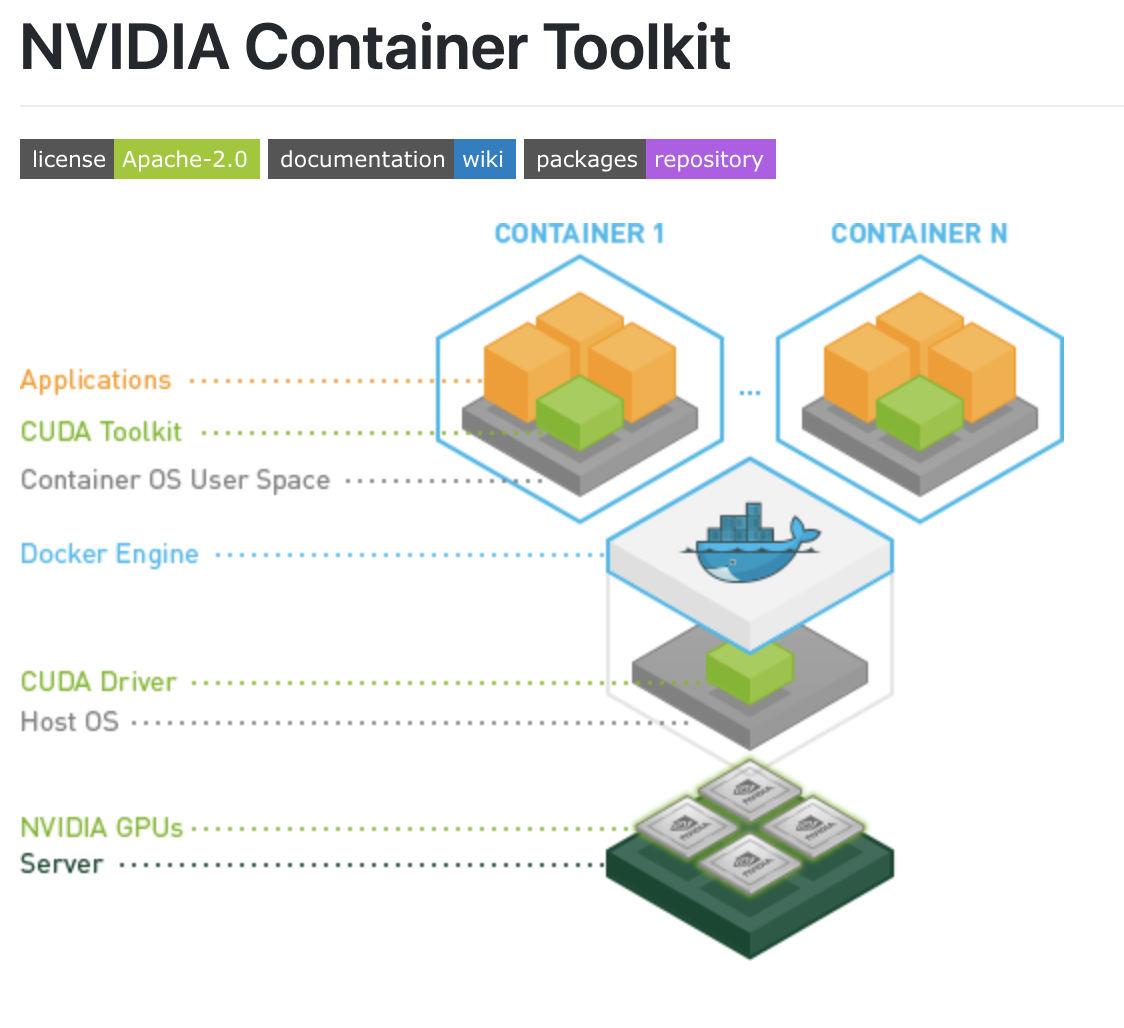

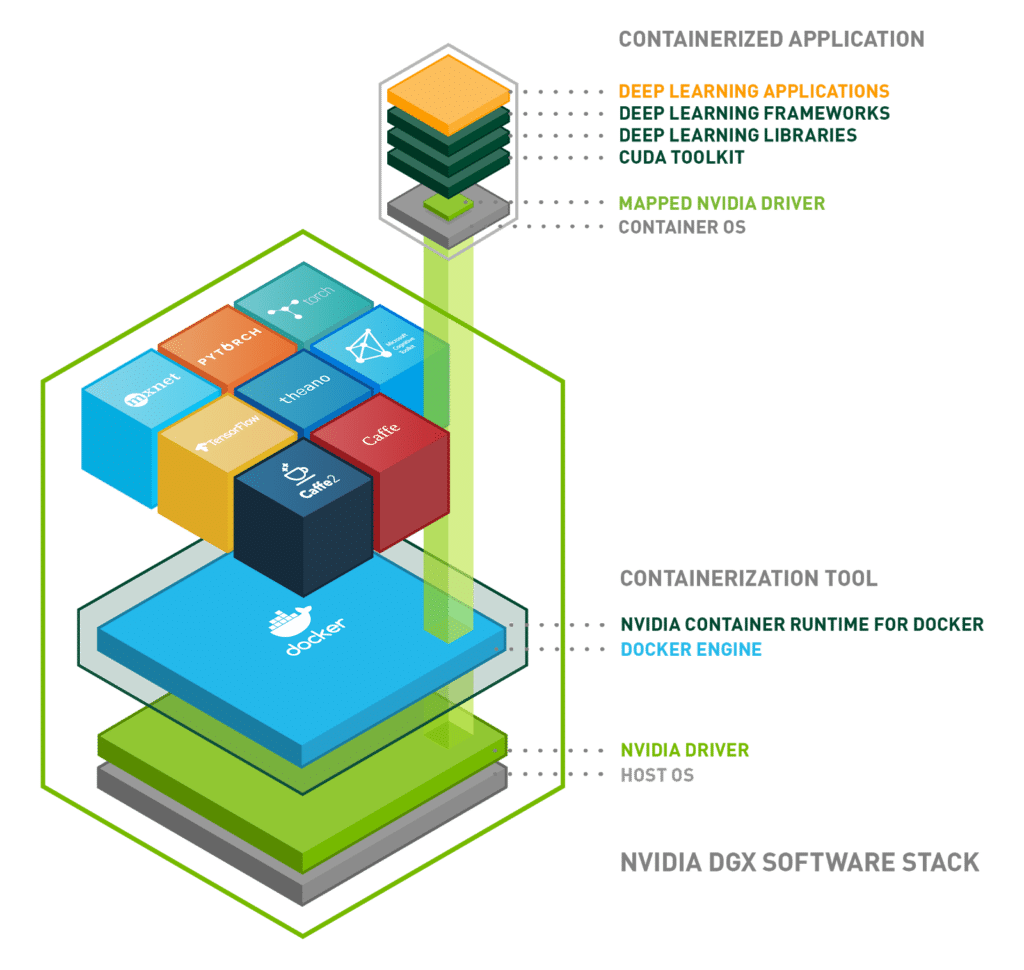

Building a Scaleable Deep Learning Serving Environment for Keras Models Using NVIDIA TensorRT Server and Google Cloud

Google Colab Free GPU Tutorial. Now you can develop deep learning… | by fuat | Deep Learning Turkey | Medium

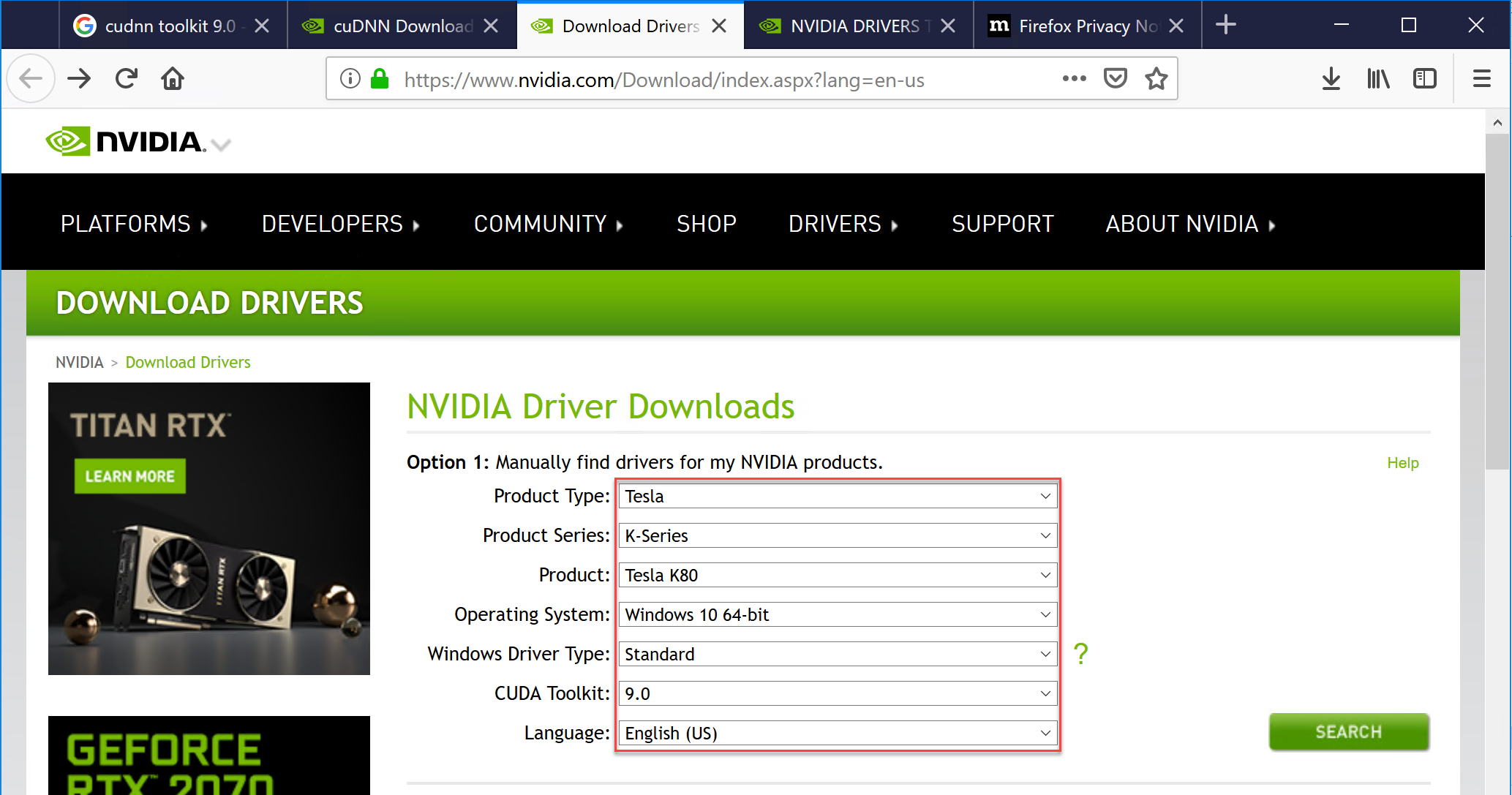

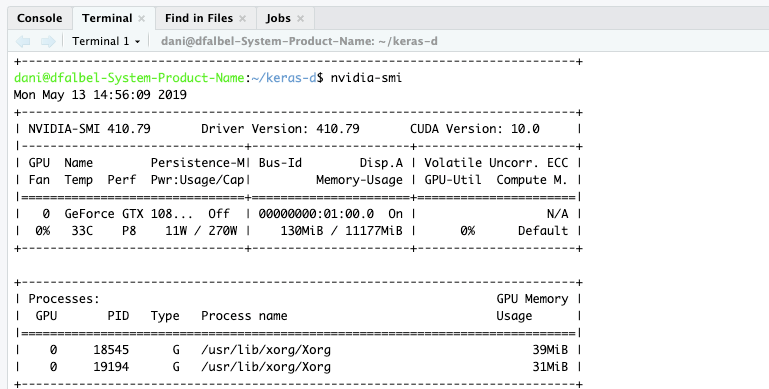

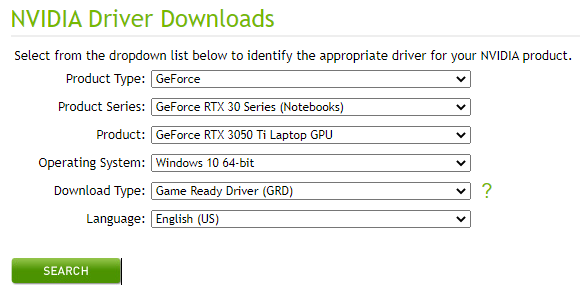

Setting up a Deep Learning Workplace with an NVIDIA Graphics Card (GPU) — for Windows OS | by Rukshan Pramoditha | Data Science 365 | Medium

Building a scaleable Deep Learning Serving Environment for Keras models using NVIDIA TensorRT Server and Google Cloud – R-Craft

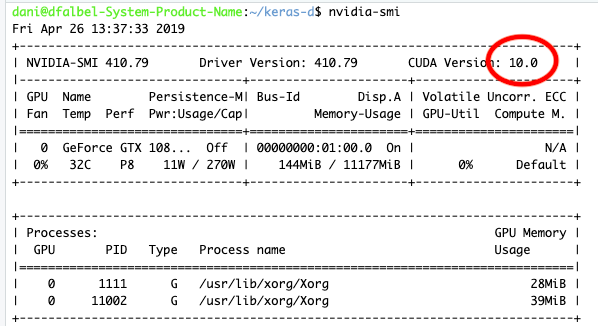

How to Set Up Nvidia GPU-Enabled Deep Learning Development Environment with Python, Keras and TensorFlow

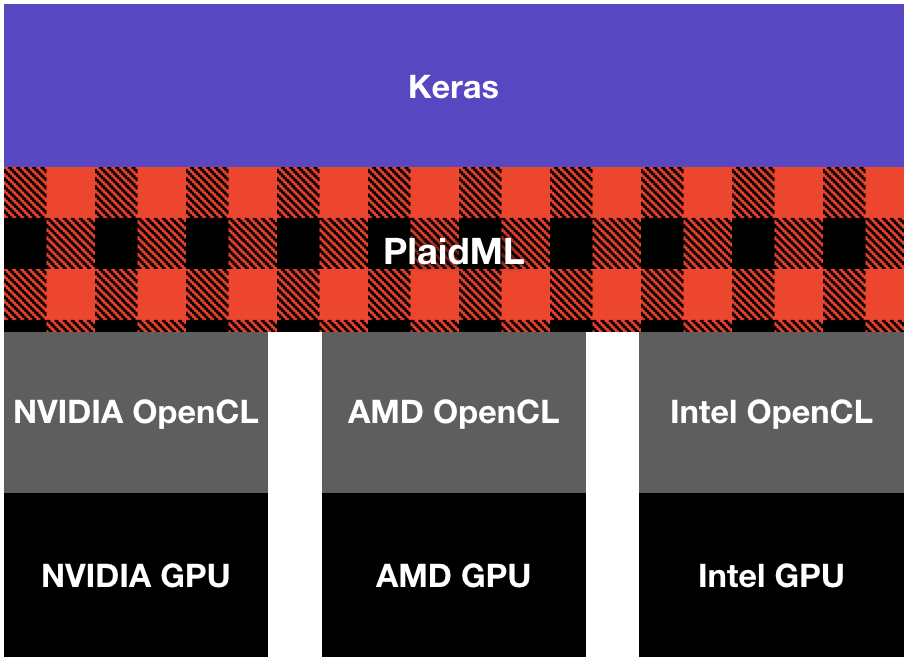

Evaluating PlaidML and GPU Support for Deep Learning on a Windows 10 Notebook | by franky | DataDrivenInvestor